Your AI Roadmap Is a Fiction

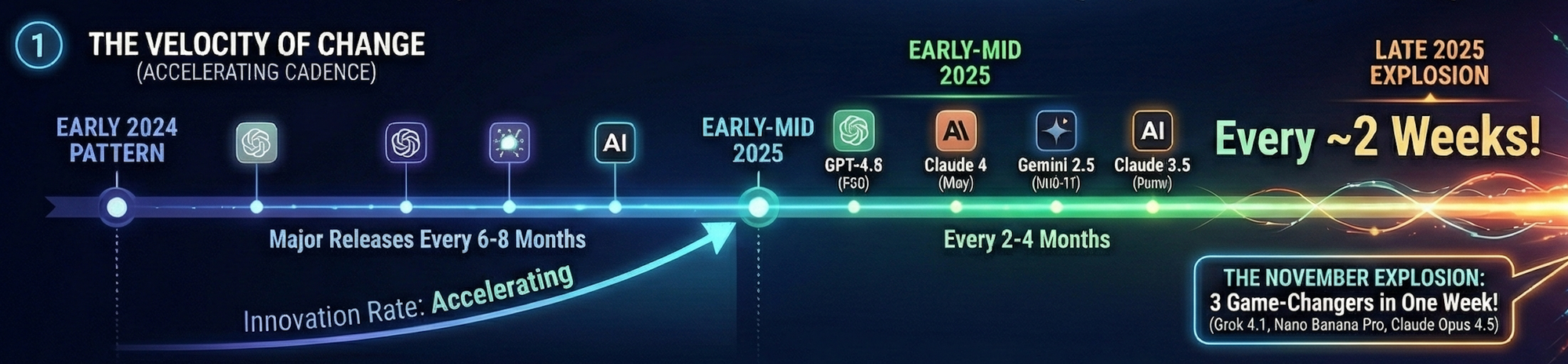

Three major AI breakthroughs launched in November 2025.

Not last quarter. Not last year. In one week.

November 17: Grok 4.1 launched with 65% fewer hallucinations than its predecessor.

November 20: Google's Gemini 3 Pro Image finally cracked the "AI can't render text in images" problem that's plagued the field for years.

November 24: Claude Opus 4.5 started outperforming human engineers on coding benchmarks. At 67% lower cost than its predecessor.

One breakthrough every 2-3 days.

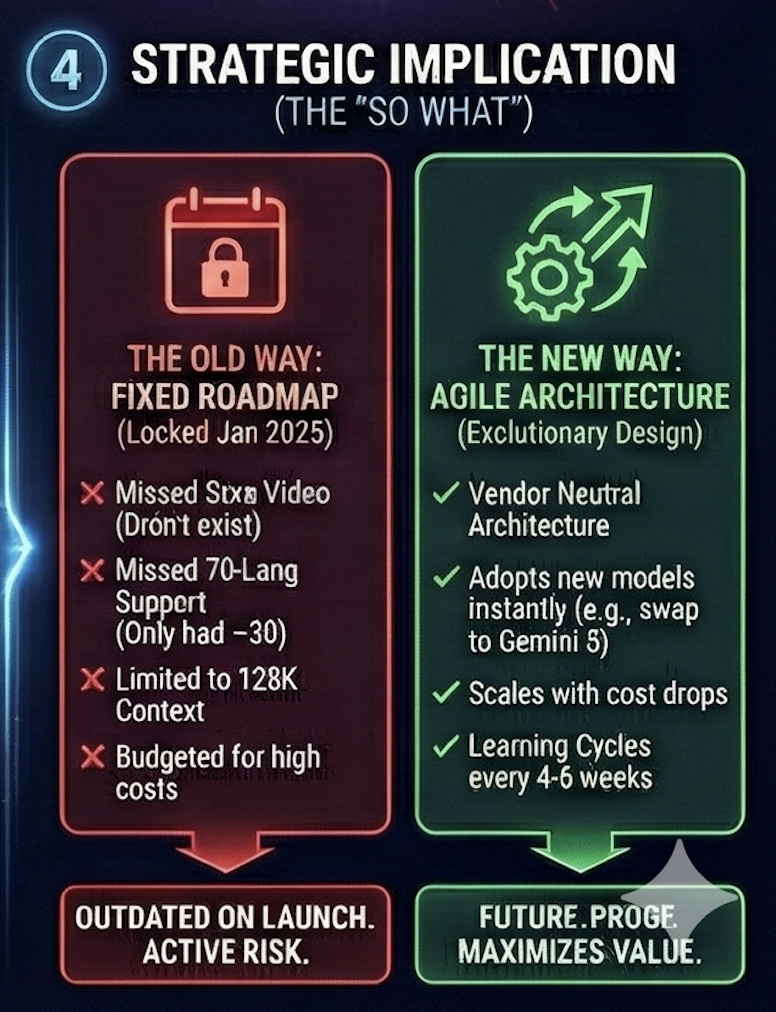

If you're building an AI strategy with a fixed 12-month roadmap, you're not planning for the future.

You're planning for the past.

Watch it on YouTube, or subscribe on Spotify and Apple Podcasts.

The Evidence Is Uncomfortable

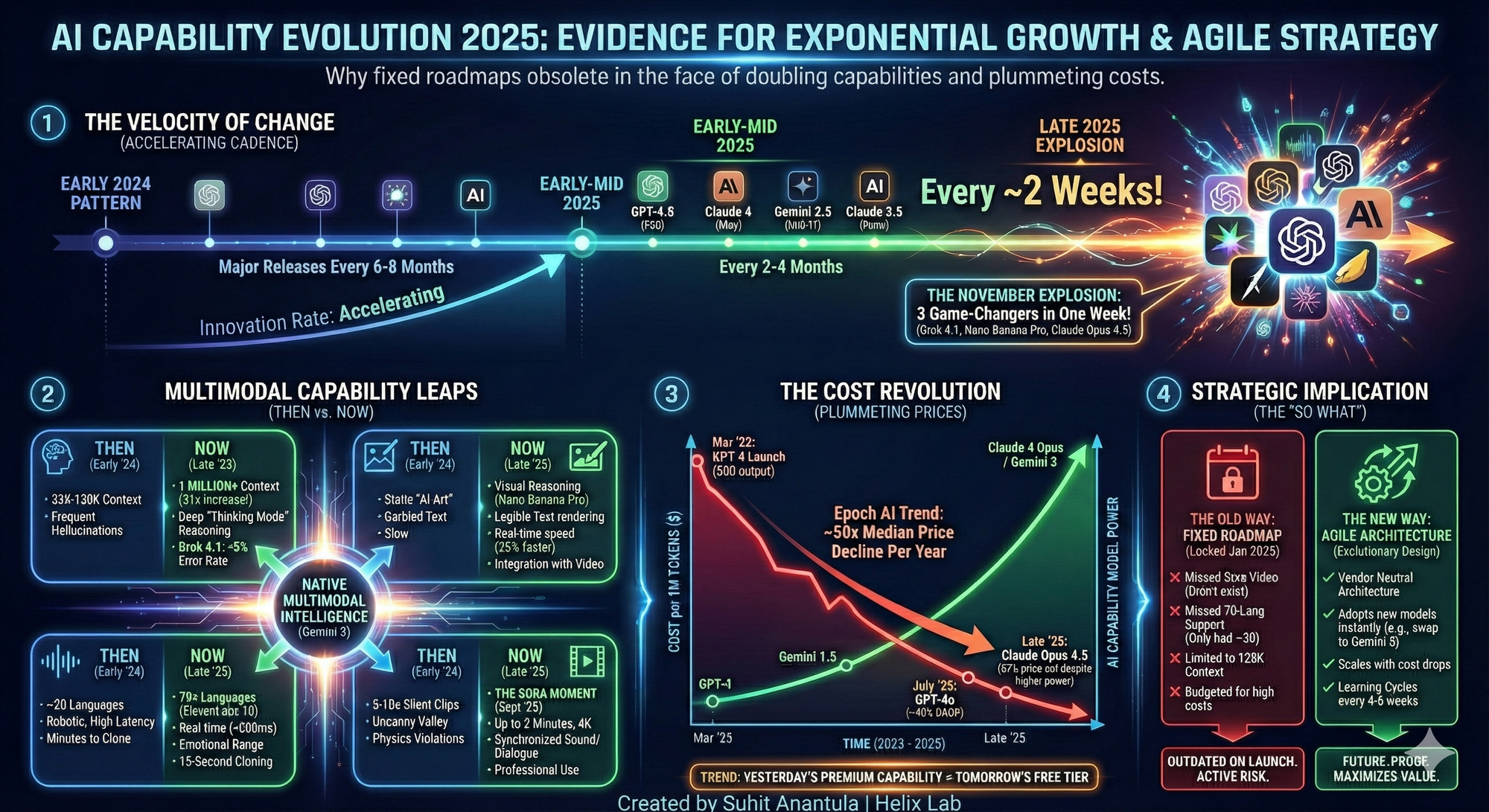

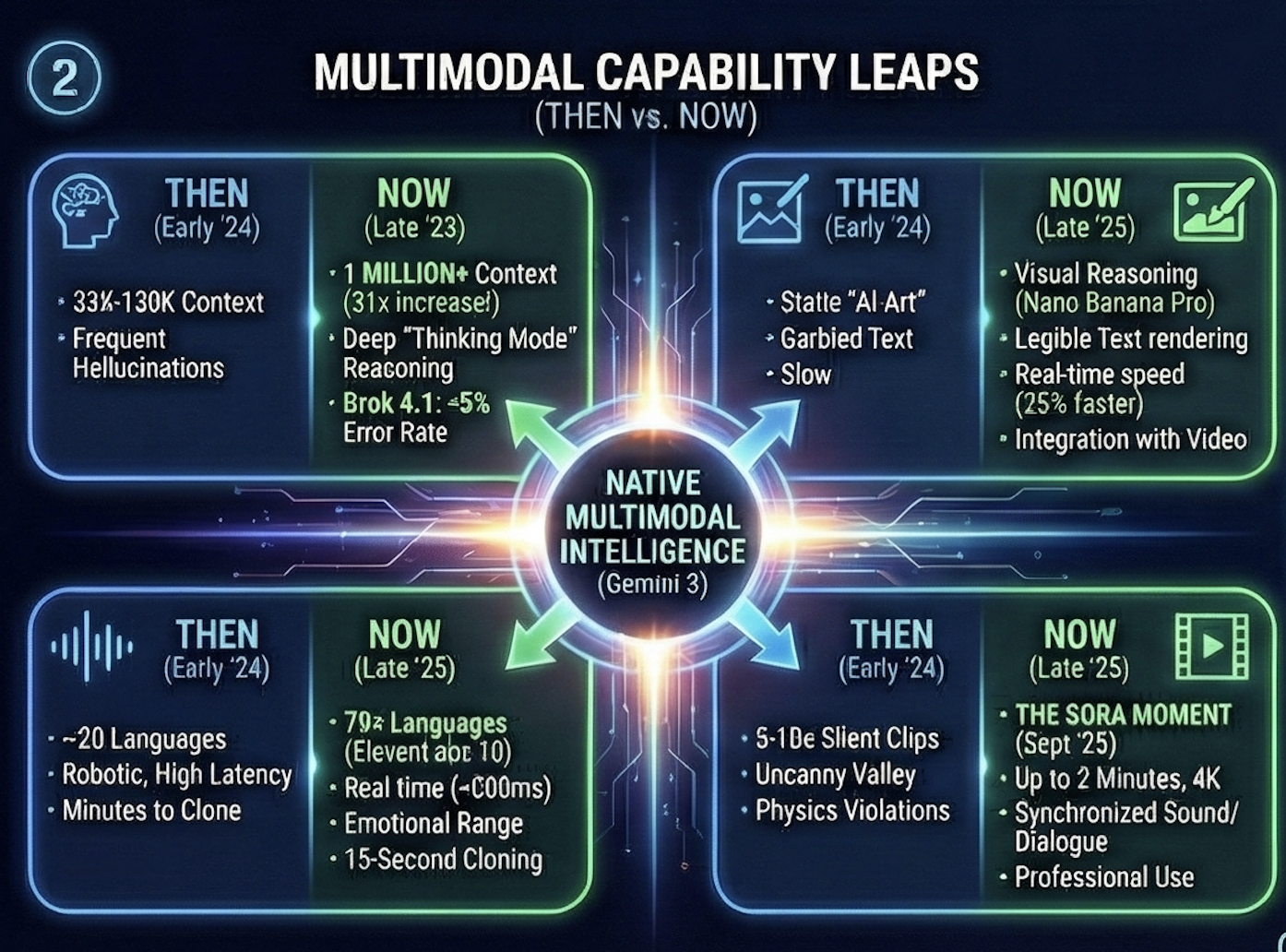

Here's what changed in 10 months:

| Capability | January 2025 | November 2025 |
|---|---|---|
| Context window | 32K-100K tokens | 200K-1M tokens |
| Video generation | Demo quality | Production 4K, 2 minutes |
| Voice languages | ~30 | 70+ |
| Cost (GPT-4 class) | $30/million tokens | $3/million tokens |

That's up to 30x more context. A 90% drop in cost. In less than a year.
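The ratios behind the table can be checked directly. This is a small sketch, assuming each range is represented by its endpoints; the constants are copied from the table, and the function names are illustrative only.

```python
# Figures copied from the table above. Ranges are represented by their endpoints.
JAN_CONTEXT = (32_000, 100_000)     # tokens
NOV_CONTEXT = (200_000, 1_000_000)  # tokens
JAN_COST, NOV_COST = 30.0, 3.0      # USD per million tokens, GPT-4 class


def context_growth(jan: tuple[int, int], nov: tuple[int, int]) -> tuple[float, float]:
    """Smallest and largest growth factor the two ranges allow."""
    smallest = nov[0] / jan[1]  # low end of November vs high end of January
    largest = nov[1] / jan[0]   # high end of November vs low end of January
    return smallest, largest


def cost_drop(old: float, new: float) -> float:
    """Fractional price decline, e.g. 0.9 for a 90% drop."""
    return 1 - new / old


low, high = context_growth(JAN_CONTEXT, NOV_CONTEXT)
print(f"Context growth: {low:.0f}x to {high:.0f}x")
print(f"Cost drop: {cost_drop(JAN_COST, NOV_COST):.0%}")
```

The extreme pairings give 2x to 31x; comparing matching ends (32K to 200K, 100K to 1M) gives roughly 6x and 10x. The cost row works out to exactly a 90% drop.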

And here's what nobody wants to talk about:

If your organization locked in requirements in January 2025—which is exactly what traditional roadmaps do—you would have missed:

- Video generation that actually works (Sora 2 launched in September)

- 70-language voice support (ElevenLabs v3 launched in June)

- Million-token context windows (Gemini 2.5 launched mid-year)

- Legible text in AI images (Gemini 3 Pro Image launched November 20)

You'd be building to January's capabilities while November's tools sit unused.

Sound familiar?

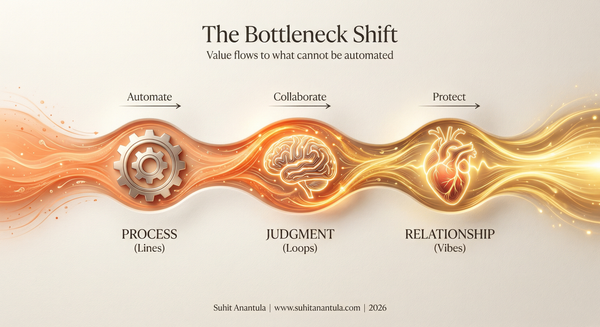

The Real Problem Isn't Speed

Everyone focuses on keeping up with AI releases.

Almost no one focuses on designing systems that don't need to predict them.

Here's what I've learned working with organizations on AI transformation:

The bottleneck isn't AI capability. It's organizational flexibility.

The technology will continue to evolve faster than anyone can predict. Grok 4.2 is expected next month. OpenAI is already iterating on GPT-5, released in August. Gemini 4 is on the horizon.

You cannot outrun this curve.

But you can design around it.

What Tissue Rejection Looks Like in AI Strategy

I was in a meeting last week where a senior leader asked: "Why can't you just give us a fixed roadmap?"

Here's what I told them:

If we'd locked requirements in January, we'd be launching a product that's already obsolete.

Traditional roadmaps assume the world holds still while you build. That assumption—which was always questionable—is now catastrophically wrong.

The organizational immune system that demands fixed plans creates what I call strategic tissue rejection:

- Planning cycles too slow: Quarterly reviews can't match monthly breakthroughs

- Procurement locks you in: 18-month vendor contracts for 6-month-old technology

- Requirements become constraints: "We scoped for GPT-4" becomes a ceiling, not a floor

- Risk frameworks lag reality: Governance designed for yesterday's capabilities

- Architecture calcifies: Integration points designed for tools that no longer exist

These aren't bugs in your process. They're features of traditional planning.

The same mechanisms that create predictability in stable environments become innovation killers when the ground shifts monthly.

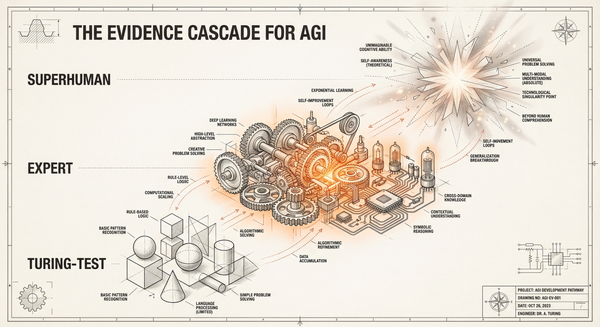

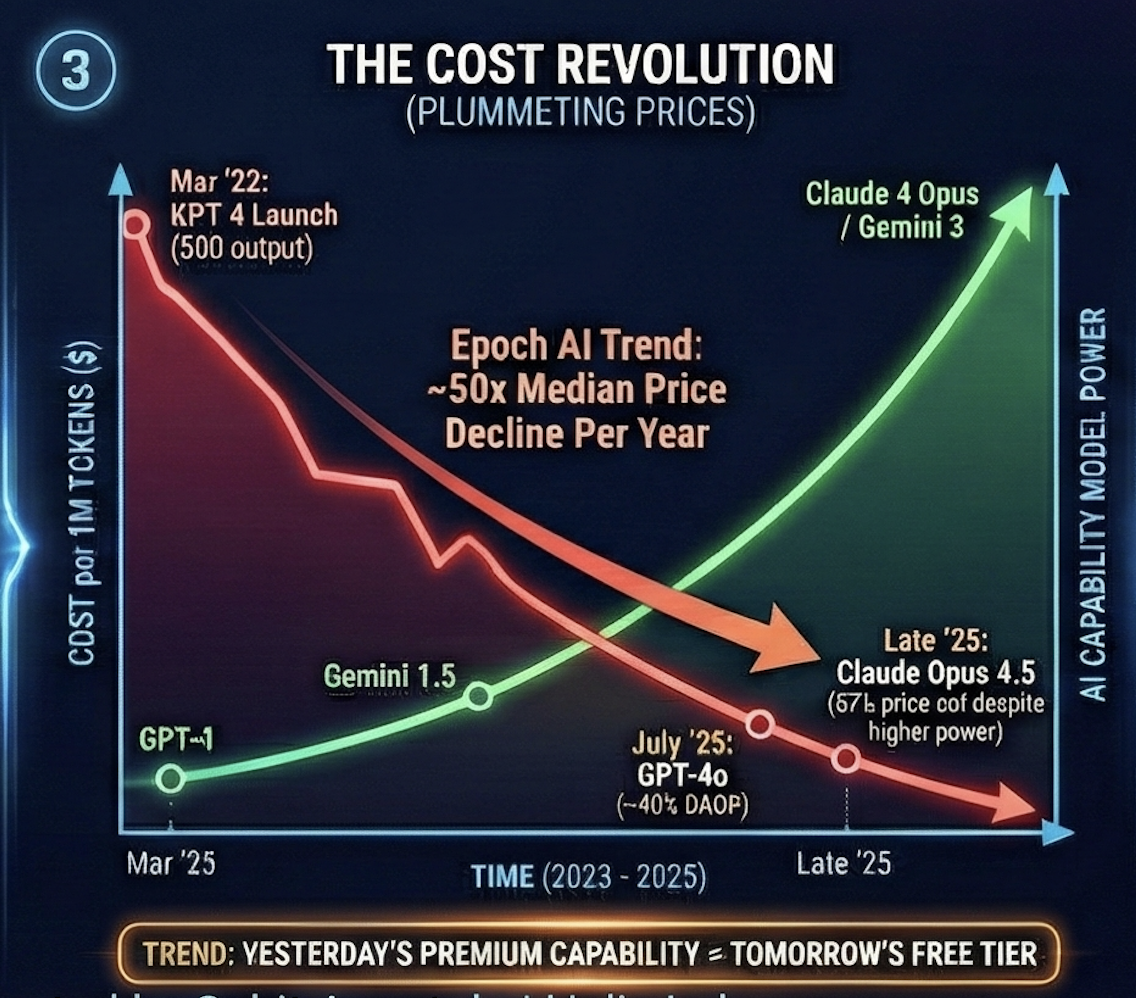

The Epoch AI Finding That Changes Everything

Recent research from Epoch AI analyzed AI cost curves from 2020-2025.

The finding?

Median price declines of 50x per year for equivalent AI capability.

GPT-4 output tokens dropped 83% in cost in just 16 months (March 2023 to July 2024).

What cost $100 in March 2023 cost $17 by July 2024. At the same pace, that $17 falls to about $3 sixteen months later.
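The compounding behind these numbers fits in a few lines. A minimal sketch, assuming the decline repeats at a constant rate (real price curves are lumpier): it takes the GPT-4 figure above, an 83% drop over 16 months, converts it to a yearly rate, and compares it with Epoch AI's reported 50x-per-year median. The function names are illustrative, not from any library.

```python
def price_after(start_usd: float, fraction_kept: float, periods: float) -> float:
    """Price after `periods` intervals if every interval keeps `fraction_kept` of the price.

    Assumes a constant compounding rate; real price curves are lumpier than this.
    """
    return start_usd * fraction_kept ** periods


def yearly_factor(fraction_kept: float, period_months: float) -> float:
    """Express a per-period decline as 'N times cheaper per year'."""
    return 1 / fraction_kept ** (12 / period_months)


# GPT-4 example from the text: an 83% drop over 16 months keeps 17% of the price.
KEPT_PER_16_MONTHS = 0.17

for periods in (0, 1, 2):
    months = 16 * periods
    print(f"after {months:>2} months: ${price_after(100, KEPT_PER_16_MONTHS, periods):.2f}")

print(f"GPT-4 pace: {yearly_factor(KEPT_PER_16_MONTHS, 16):.1f}x cheaper per year")

# Epoch AI's reported median of 50x per year keeps 1/50 of the price after 12 months.
print(f"$17 after 12 months at 50x/year: ${price_after(17, 1 / 50, 1):.2f}")
```

The loop prints $100.00, $17.00 and $2.89. The GPT-4 pace annualizes to about 3.8x per year, while the 50x median would take $17 to $0.34 in a single year. The two figures describe very different rates, so which one a plan assumes matters a great deal.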

Your 3-year business case is built on pricing that won't exist by year 2.

Your ROI calculations are fiction. Not because you're bad at math—because the inputs change faster than planning cycles.
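To see how a three-year business case goes stale, here is a sketch that computes yearly spend for a fixed token volume under different price trajectories. Every input is an assumption, not a forecast: the 10,000-million-token annual volume is a hypothetical workload, the $3-per-million starting price comes from the table earlier in the article, and 3.8x per year is the text's GPT-4 figure (83% over 16 months) annualized.

```python
def yearly_spend(price_per_m: float, volume_m: float, factor_per_year: float, years: int = 3) -> list[float]:
    """Spend for each year if the price divides by `factor_per_year` annually.

    factor_per_year = 1.0 models the flat pricing a traditional business case assumes.
    """
    return [volume_m * price_per_m / factor_per_year ** year for year in range(years)]


VOLUME_M = 10_000  # hypothetical: 10,000 million tokens per year
PRICE = 3.0        # USD per million tokens, from the table's November 2025 column

scenarios = {
    "flat pricing": 1.0,
    "GPT-4 pace (~3.8x/yr)": 3.8,
    "Epoch median (50x/yr)": 50.0,
}
for label, factor in scenarios.items():
    spend = yearly_spend(PRICE, VOLUME_M, factor)
    row = "  ".join(f"${s:>10,.2f}" for s in spend)
    print(f"{label:<24}{row}  total ${sum(spend):,.2f}")
```

Under flat pricing the three years cost $90,000. At the Epoch median the same workload costs $30,612, almost all of it in year one. The spreadsheet math is fine; the price assumption is what decides the answer.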

What Actually Works

The organizations succeeding with AI aren't the ones with the best predictions.

They're the ones who stopped predicting.

They design for evolution, not prediction.

1. Principles Over Features

Instead of specifying "we need GPT-4 integration by Q3," they define principles:

- "AI assists humans, never replaces judgment"

- "Governance is built in from day one"

- "Architecture is model-agnostic"

Principles don't expire when a new model launches. Features do.

When Claude Opus 4.5 launched last week, 67% cheaper than its predecessor and with better performance, organizations with principle-based architecture could switch overnight. Others are locked into contracts for tools that are now obsolete.

2. Architecture Over Roadmap

Instead of locking in vendors and models, they build flexible infrastructure:

- Model-agnostic design (swap Claude for GPT or Gemini without rebuilding)

- API abstraction layers (change the AI, keep the workflow)

- Modular capabilities (add video when ready, add voice when useful)

The architecture absorbs evolution. The roadmap fights it.
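The model-agnostic design and API abstraction layer described above can be sketched in Python, assuming a single text-completion capability. The workflow depends only on a small `TextModel` interface, and a config value picks which adapter sits behind it. `TextModel`, `EchoModel`, `REGISTRY`, `load_model`, and `summarize_ticket` are hypothetical names; `EchoModel` is a stand-in that echoes its prompt, where a real adapter would wrap one vendor's SDK. No real vendor API is called here.

```python
from dataclasses import dataclass
from typing import Callable, Protocol


class TextModel(Protocol):
    """The only interface the workflow depends on (hypothetical)."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class EchoModel:
    """Stand-in adapter. A real adapter would wrap one vendor's SDK behind `complete`."""

    name: str

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


# Registry mapping config names to adapter factories. Names are hypothetical.
REGISTRY: dict[str, Callable[[], TextModel]] = {
    "claude": lambda: EchoModel("claude"),
    "gpt": lambda: EchoModel("gpt"),
    "gemini": lambda: EchoModel("gemini"),
}


def load_model(config: dict[str, str]) -> TextModel:
    """Pick the adapter named in config; the workflow never imports a vendor directly."""
    return REGISTRY[config["model"]]()


def summarize_ticket(model: TextModel, ticket: str) -> str:
    """Business workflow: knows nothing about which vendor answers."""
    return model.complete(f"Summarize for a support lead: {ticket}")


# Swapping vendors is a config change, not a rebuild.
for cfg in ({"model": "claude"}, {"model": "gemini"}):
    print(summarize_ticket(load_model(cfg), "Login fails after password reset"))
```

Adding a new model means writing one adapter and one registry entry; `summarize_ticket` and every other workflow stay untouched. A production layer would also need to normalize details this sketch ignores, such as prompt formats, token limits, and error handling.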

3. Learning Loops Over Milestones

Instead of "deliver feature X by date Y," they run continuous experiments:

- Build something small

- Test with real users

- Learn what actually matters

- Adapt based on evidence

- Repeat

The goal isn't to deliver what you planned. It's to discover what you need.

This sounds like common sense. It isn't.

It requires abandoning the comfort of fixed plans. It requires telling stakeholders "I don't know exactly what we'll build in 12 months." It requires trusting the process more than the prediction.
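The build, test, learn, adapt loop above can be made concrete as a simple experiment log. This is a hypothetical sketch: each `Experiment` compares user ratings for the current version against a small new build, and `LearningLog` keeps the change only if the average improves by at least an assumed threshold. The class names, the 0.05 threshold, and the ratings in the usage lines are invented purely to exercise the code.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class Experiment:
    """One build-test-learn cycle: a small change tried with real users."""

    hypothesis: str
    baseline: list[float]  # user ratings for the current version
    variant: list[float]   # the same ratings for the small new build

    def lift(self) -> float:
        return mean(self.variant) - mean(self.baseline)


@dataclass
class LearningLog:
    min_lift: float = 0.05  # hypothetical threshold for "worth adopting"
    adopted: list[str] = field(default_factory=list)
    dropped: list[str] = field(default_factory=list)

    def record(self, exp: Experiment) -> bool:
        """Adapt based on evidence: keep the change only if users measurably benefit."""
        keep = exp.lift() >= self.min_lift
        (self.adopted if keep else self.dropped).append(exp.hypothesis)
        return keep


# Illustrative, made-up ratings.
log = LearningLog()
log.record(Experiment("Draft replies with the new model", [0.60, 0.70, 0.65], [0.80, 0.75, 0.85]))
log.record(Experiment("Add voice input", [0.70, 0.72], [0.70, 0.71]))
print("adopted:", log.adopted)
print("dropped:", log.dropped)
```

A real loop would use larger samples and a significance test before adopting anything. The point is only that each cycle ends with an explicit keep-or-drop decision backed by user data rather than by the original plan.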

Three Questions to Assess Your AI Strategy

Question 1: If a breakthrough AI capability launched tomorrow, how quickly could your architecture use it?

If the answer is "we'd need to rebuild," you have an architecture problem, not an AI problem.

If the answer is "we'd need to renegotiate contracts," you have a procurement problem designed for a slower world.

Question 2: Are your AI plans defined by principles or by specific tools?

"We're building with GPT-4" expires.

"AI augments human judgment" doesn't.

Which statement describes your strategy document?

Question 3: When did you last change your AI strategy based on something you learned?

If it's been more than a quarter, you're not learning fast enough.

If it's been more than a year, your strategy is already fiction.

The Transformation That Actually Matters

Here's the uncomfortable truth:

The organizations that will thrive aren't the ones who predict AI's future correctly.

They're the ones who build the capability to adapt to whatever that future turns out to be.

This isn't about being faster. It's about being flexible.

It's not about having better predictions. It's about needing fewer of them.

The risk isn't moving too fast.

The risk is being locked into yesterday's capabilities while the world moves on.

Every month you wait with a fixed roadmap is a month of better tools you're not using. A month of cost savings you're not capturing. A month of capability your competitors are deploying.

What This Means For You

If you're a leader responsible for AI strategy, ask yourself:

Are you designing for the AI capabilities of January 2025?

Or are you designing for capabilities that don't exist yet—but will by the time you launch?

The difference determines whether you're building the future or building the past.

Three AI breakthroughs launched last week. What launched while you were reading this?

Reach out to Suhit Anantula | suhitanantula.com for your future-proof roadmap.